Lightning-fast BUILD file generation with Gazelle lazy indexing¶

This is the blog post edition of my BazelCon 2025 talk. You can watch the talk on YouTube below or read the slides and transcript here.

A brief history of Gazelle¶

I thought I'd start with the history of Gazelle. First, where did the name come from? Inside Google, there was a tool called Glaze, which generated BUILD files for Go packages. It listed the files in the current directory, parsed their package names and imports, and updated the BUILD file without needing to look at anything else. That was possible because Google's monorepo was structured to allow a simple translation between Go imports and Bazel labels. It was very fast, like 50ms, so people usually configured their editors to run it whenever they saved a file. So Go users could mostly ignore BUILD files altogether. So Glaze is to Blaze as Gazelle is to Bazel. I hope Gazelle can be as fast some day, but because the world outside Google's monorepo is complicated, we have a lot more to do.

Gazelle was first introduced in 2016 by Yuki Yugui Sonoda to be used when fetching external Go projects. I've never met her, but I hope she's pleased with what we've built since then.

2017 was when I joined the Go Tools team along with Ian Cottrell. We both worked on rules_go and Gazelle to improve Bazel for Kubernetes.

In 2018, I added Gazelle's extension interface so it could support other languages, at least so Go and protobuf could be decoupled.

In 2020, Andrew Allen contributed the first new extension, generating bzl_library rules.

In 2023, Gazelle gained support for Bzlmod, which is actually a simplification from what it did for WORKSPACE.

And this year, we have lazy indexing, and we're discussing a 2.0 release with changes to the extension interface.

How does Gazelle work?¶

Before we get to lazy indexing, it helps to have a model of how Gazelle works. You can run it either in a single directory or across the whole repo. The first pass is to parse BUILD files and read configuration written as comments. Gazelle then reads source files and generates rules it thinks should be present and merges them with existing rules. As part of this pass, Gazelle builds an in-memory index of all library rules. It needs to read all BUILD files in the repo to do that, even if it was only asked to update one directory. Once the index is built, as a second pass, Gazelle performs dependency resolution, where it maps import strings to Bazel labels, setting the deps attribute for each generated rule. These get merged again, then Gazelle formats and writes out the BUILD files.

Isn't that slow?¶

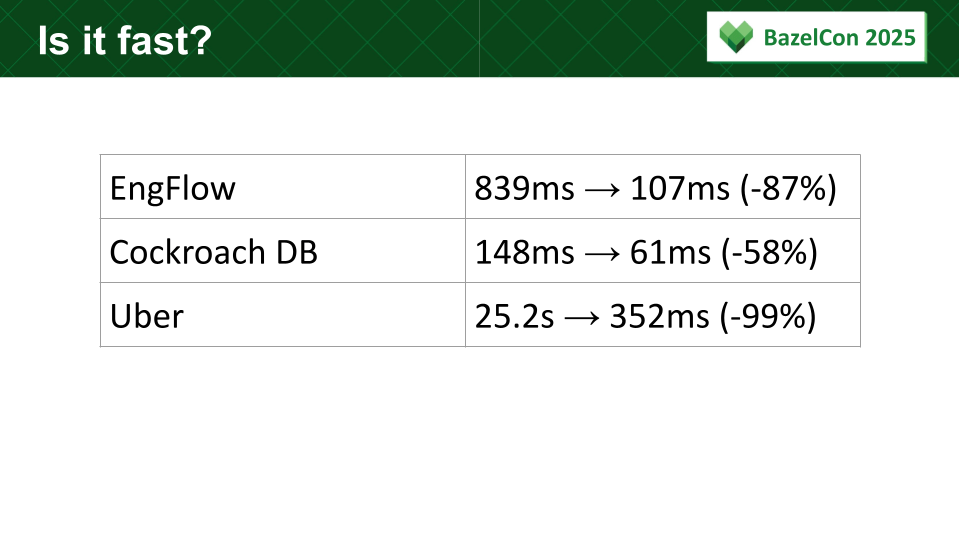

So, I mentioned Gazelle needs to index all the BUILD files in the repo, even if you only edited one source file. This can be very slow, and it varies a lot between repos. Here's a sample of how long this indexing takes:

- For Kubernetes, back in 2018, it took up to 30 seconds, and it was one of the reasons they were unhappy with Bazel.

- For EngFlow, it takes 839ms.

- For Cockroach Labs, it takes 148ms.

- Within Uber's monorepo, it takes 25s.

This all depends on how big your repo is, what directories you've excluded, and what extensions you're using. Uber has a big repo and a lot of extensions.

Why is it like this?¶

Why did we do indexing this way? After all, Glaze didn't need any indexing. But as I said, the world outside Google is complicated. Before Go modules, vendor directories could be nested anywhere, so there wasn't a 1:1 correspondence between import strings and directories. Protobuf was worse - you could have a directory of protos anywhere. So when you can't do a pure textual transformation, and you need to know where things are located in the repo, indexing solves the problem. Most open source repos are small enough that the delay was acceptable. Only marginally so for Kubernetes.

Lazy indexing¶

We can do better, which brings me to lazy indexing. Most directories and import strings have some structure. So even though we can't do a purely textual transformation from import string to label, we would like for Gazelle to index a small number of directories when it sees an import string. For example, suppose we're in module example.com/m, and we're importing example.com/m/foo/bar. Gazelle should look in the directory foo/bar. Or say we're importing a library outside our module, example.com/p/q. Gazelle should look in our vendor directory.

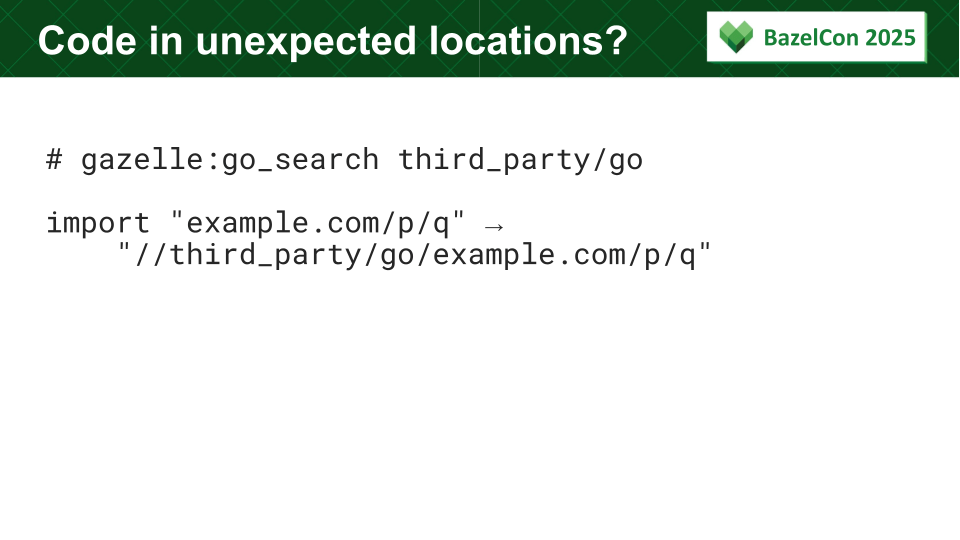

Code in unexpected locations?¶

That's an easy case. What if we have a vendor directory with a non-standard name, like third_party/go? Well, you can tell Gazelle about it with a go_search directive. This just adds a directory to a list. So now when we import example.com/p/q, Gazelle looks in third_party/go in addition to our vendor directory.

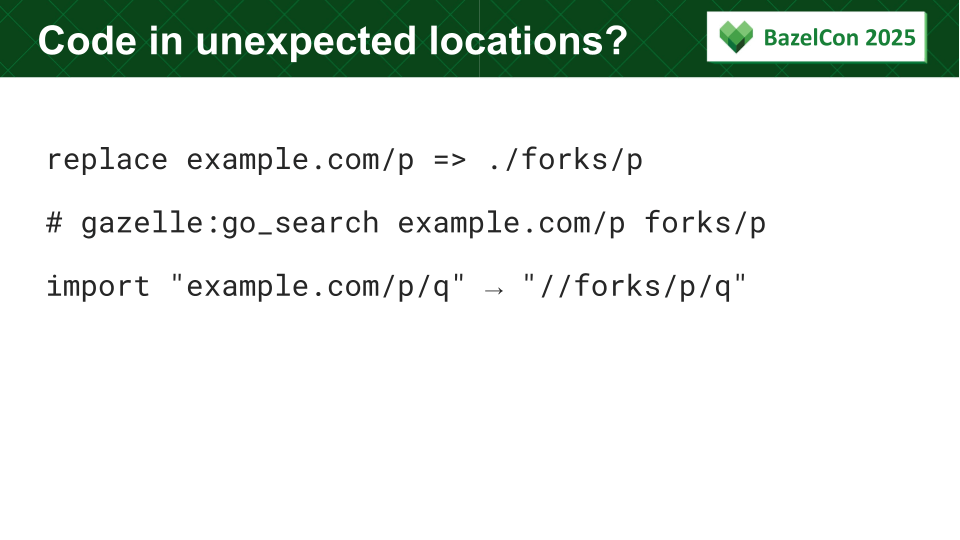

How about something narrower? Maybe we created a fork of our dependency example.com/p, copying it into a directory that doesn't have its full path, forks/p. The go_search directive can strip a prefix from the path for you. This says if an import has the prefix example.com/p, then look in the forks/p directory, not including the prefix.

How does it work?¶

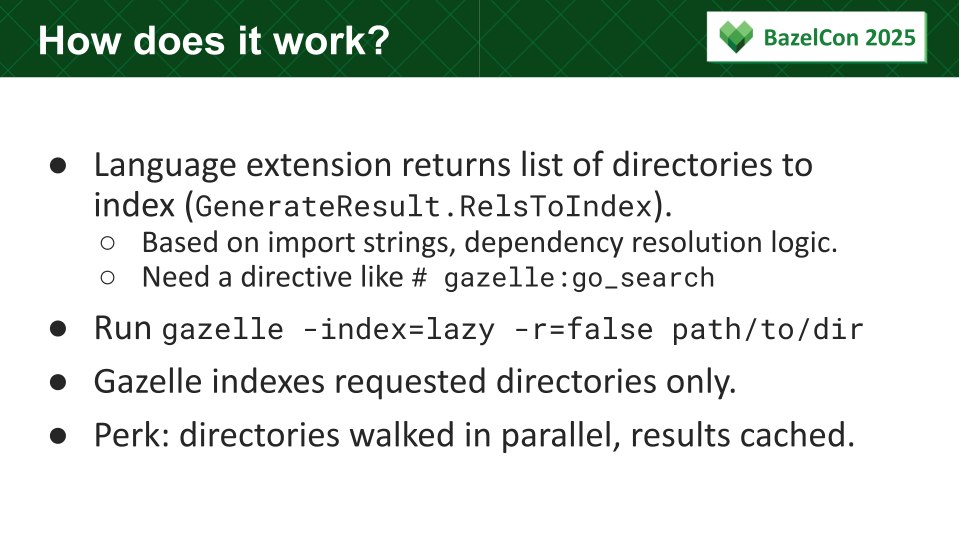

So how does this work? Gazelle needs some language-specific intelligence. So, each extension needs to support lazy indexing by returning a list of directories to index, based on import strings seen in source files. Each extension can define a search directive like go_search so that users have a way to say where their libraries are. Once that's in place, you run Gazelle in a specific directory with index=lazy and r=false, which disables recursion. Gazelle then only indexes directories it was told to update plus directories requested by extensions plus their parent directories, since it needs read configuration from those anyway. But it no longer needs to scan all BUILD files in the whole repo.

As an added benefit, Gazelle now reads directory metadata in parallel and caches it in memory, whether or not you're using lazy indexing. Thanks to Jason Bedard for doing a lot of that work.

Is it fast?¶

With those changes, Gazelle now runs considerably faster in most repos with very little additional configuration. With a few go_search directives, in EngFlow, we got down to 107ms. Uber went down to 352ms, a 99% improvement. I should add, these numbers are running the Gazelle binary directly. When you invoke it with bazel run, that adds 500-1000ms.

Speed is the killer feature¶

Why does this matter? Speed is an important feature for interactive tools. It keeps you productive, it keeps you focused, it changes how you feel about a tool, and it changes how you use it. If it takes 50ms, you can run Gazelle automatically when you save a file. If it's 10s, you only run it when you have to. If it's 100s, you might validate your BUILD files in CI, but you'll make most of your edits manually. And if it's 1000s, don't even bother. If a tool is fast, you'll use it for more things. It's more useful.

What's next?¶

What comes next? We'll add support for lazy indexing in more open source extensions. It's in Go, proto, and C++ already. If you maintain an extension, public or private, I hope you add it, and I'm happy to work with you. Gazelle has a documentation page for writing extensions, and there's a section there saying what to do. I mentioned in the keynote we're discussing a 2.0 release of Gazelle, and we'll likely enable lazy indexing by default in that release.

Thanks for reading! Special thanks Alex Torok for proposing the idea for lazy indexing originally.